As Artifical Intelligence (AI) algorithms pervade everyday life from mortage approvals to clinical decisions to parole adjudications to email auto-completions, there has been a lot of discussion about preventing bias from creeping into these algorithms. This essay is an initial exploration about such bias.

Here's what the Oxford English dictionary has to say about the word.

Bias: Prejudice in favor of or against one thing, person, or group compared with another, usually in a way considered to be unfair.

Most of us are used to thinking of computer programs as inanimate - even cold - pieces of logic. We've know that computer programs and algorithms have long been blindingly faster than us humans. But that have yet been unable to have even the most simple feelings. How then can they demonstrate prejudice? How are they even able to discriminate for or against anything at all?

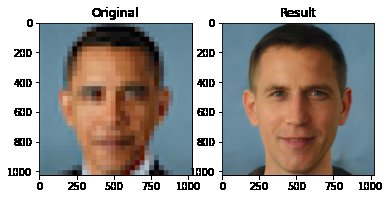

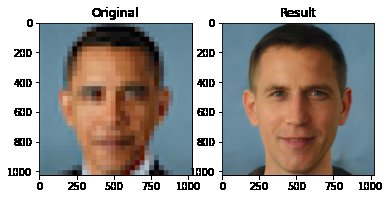

Recently, (June 2020) researchers at Duke University debuted an AI tool called Pulse that they said could take photos of people's faces that were very pixellated and, using ML (machine learning), de-pixellate them back to high-resolution and realistic faces. And it did seem to work fine in many cases. All well and good until someone tried it with a pixellated photo of President Obama and the program ended up reverse-engineering it to a white person's face! It wasn't a one-off bug: it was easily reproducible by anyone. And it wasn't just President Obama - it routinely lightened African-American or other darker faces.

How did this happen - how does a program show a predilection for light-skinned or causasian faces and features when it obviously has no concept of ethnicity or race or any of the many other divisions in human society?

I was able to gain a somewhat better understanding of such biases at work in ML after I did a couple of ML-based computer vision projects recently: Cats vs Dogs and Bird Species Identifcation. Hopefully this write-up can be a start into understanding this important topic.

The Cats from Dogs model does a really good job in the vast majority of cases where the pictures are of actual cats and dogs. However, if presented with something that looks approximately like a cat - say a carving of a cat on a pumpkin, or even Batman with his pointy cat ears - it will assume it is a cat. More interesting: when presented a picture of a Banana which we can all agree looks neither like a cat or a dog, it will still classify it as a Cat or a Dog because that is the entirety of its world view (as it were).

Now that's a binary case - everything is either a cat or a dog. A slightly more elaborate model I built to identify 200 different bird species also does quite nicely on the species it has trained on such as Bald Eagles or Swans or Peacocks etc. But it has been trained to classify only 200 types of birds. There are 10,000 bird species on our planet so the other 9800 bird species will all get forcibly classified as one of the 200 in the training set. The model will do its best to find a close match but it doesn't know what it doesn't know. If you upload a picture of a parrot, it will end up classifying it as a macaw or bunting not because it is biased against parrots but rather because it is ignorant of parrots' existence but does know about macaws and buntings. Such blind spots abound with ML classification. Here are some actual predictions including what the model thinks of, for example, a microwave oven!

Mark Twain is supposed to have said "to a person with a hammer, everything looks like a nail". In very much the same way, to an ML model that has trained on pictures of just cats and dogs, every picture is one of either a cat or a dog! To one that has trained on 200 species of birds, everything is one of those 200 species!

A model that has trained on 10+ years of recruiting data for engineering roles will think that men are more likely to succeed in engineering roles than women not because of any inbuilt misogyny within the model but rather the implicit misogyny of the recruiting decisions over the years that the data represents and which the model learns from. (1)

Stepping back a minute, let's try and see how ML and AI do what they do. How is it that in Gmail, when you begin to type in "Are you free for coffee t", it can suggest "Are you free for coffee tomorrow?"? but if you go on to type "Are you free for coffee th" then it suggests "Are you free for coffee this week?" Google isn't divining what you're thinking. What Google has done is trained an algorithm against millions, perhaps billions of pieces of email and other texts to the point that it has learned that the most likely sequence of letters or words that will complete "Are you free for coffee t" is in fact "omorrow". This, by the way, is also how when you type in "weathr in new yrok" into a Google search you are shown results for the correct spelling of your query: "weather in new york". (2)

That is to say that ML algorithms devour data and look for patterns. When asked to make a prediction (how to end a partial word or sentence for example) they rely on patterns that were gleaned from the data to make a prediction. So, their predictions are very dependent on the data that they were trained on. A dataset chosen without thought and care will pass on its preferences, characteristics and biases to the model.

Let's stick with the Gmail example for text. If Google had trained the algorithm exclusively with emails and text from, say, Great Britain, then auto-complete would make predictions with spellings such as colour and flavour rather than color and flavor. The algorithm is not expressing a preference for or a bias towards the Queen's English, it's just what it was trained on. Quite literally, it doesn't know what it doesn't know.

In the case of Duke University's face de-pixellator too, it was likely implicit rather than explicit bias that was the problem. Their research paper says that it was built off of the Flickr Face HQ, a dataset of public photos of people from Flickr. Now, while that is a collection of all public photos of people on Flickr with no filter for race, it likely had implicit bias in that there are fewer photos of black or latinx people on Flickr than there are black or latinx in the general population. As a result, an algorithm trained on the Flickr Face HQ will inherit any biases in the data - explicit or implicit. As one of the pioneers in ML and computer vision said at the time: Train the *exact* same system on a dataset from Senegal, and everyone will look African. (3)

Now, that (the Senegal comment) is true - and demonstrably so - but that fact should not absolve ML practitioners from the responisbility and importance of better understanding any potential underlying biases in the ML systems they are building and deploying. In fact it leads to a relatively simple solution for such problems: more representative data to train the model. Indeed, Rachael Tatman, a sociolinguist and AI researcher found that Google's speech recogniton had a gender bias (4) but was able to correct for it by training on female speakers and speech.

Further, many image recognition systems are built, not from scratch but off of other, more powerful models in a technique called Transfer Learning. And those models may have their own biases such that any downstream system built on top of them will have those biases transfered to itself i.e. the downstream system!

It's one thing when a research or theoretical or demo-style project has these shortcomings. It's even funny to find a model identifying a microwave oven as an emperor penguin! But when one begins to use ML/AI models in far more serious and life-impacting applications like medical trials or parole decisions, then that's when their uninformed or semi-informed use is far more worrying.

For example women have been, for decades, routinely under-represented in medical trials (and continue to be). Any model that looks at 10 or 20 years of data and diagnoses (for example: symptoms presented or responsiveness to a treatment) will generalize better to new predictions for samples that look like the training data i.e. men will get more accurate predictions than women. (5)

Similarly, racial and ethnic disparities plague the US justice system. Any system that trains on data for the decades where these inequities were even more pronounced than they are today will imbibe - and formalize - all those biases. Some racial groups are more likely to be granted parole than others. A system trained on that data will also continue to propagate that bias into its predictions for the future. This is a vicious circle. (6)

Once we see that it is possible for inanimate, unfeeling algorithms to be unable to identify bananas and microwave ovens correctly it isn't difficult to understand that these same algorithms might similarly be unable to identify the right course of action especially when dealing with under-represented groups and phenomena.

In these cases, it is extremely important for both ML researchers and ML practitioners to be aware of these potential biases and correct for them. ML/AI systems have tremendous promise and we have barely scratched the surface of all that they can improve. But it is crucial that even as we human beings cede decision-making to algorithms, we take an enormous amount of care that all the flaws of human beings and their decision-making that have been encoded into designs and data over decades - or centuries - don't get hard-wired into the algorithms of tomorrow.

With care and dedication, this is certainly do-able.

References: